How does multimodal AI compare to unimodal AI? Explore the key differences and see how it’s transforming online fashion search!

by YesPlz.AI, February 2025

2024 marked a turning point for multimodal AI, making it one of the most important AI trends of the year, and its impact is set to grow even further in the coming years. The market for multimodal AI is estimated to grow at a compound annual growth rate (CAGR) of 32.7% from 2025 to 2034.

Why is multimodal AI gaining so much attention? It makes interacting with technology as easy and natural as chatting with a friend.

Take AI fashion search, for example. Finding clothing online used to be frustrating because shoppers had to guess the right keywords. What if they could simply type what's on their mind and get spot-on results? That's what multimodal AI makes possible when it powers eCommerce search.

In today's article, let’s learn what multimodal AI for fashion is and how it helps online shoppers communicate with search engines more effectively. This article will cover:

Unimodal AI vs. Multimodal AI: Key Differences

Applications of Multimodal AI for Fashion

To fully understand what multimodal AI is, we first need to see how it differs from unimodal AI. Let’s break it down in this section.

Unimodal AI handles a single type of data, or modality, at a time: text, image, audio, or video. Most traditional search engines work this way. Algolia, one of the most popular search engines in eCommerce, is a good example. It's unimodal, meaning it only processes text.

Here's how a unimodal search engine like Algolia works:

Shoppers input text into a search bar.

A unimodal search engine matches shoppers' input words with product text data.

It returns the most relevant products based on keyword similarity.
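The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not Algolia's actual ranking or API: it assumes a plain keyword-overlap score over each product's title and tags, with no stemming or synonyms, and the catalog entries are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def keyword_search(query: str, products: list[dict], top_k: int = 3) -> list[str]:
    """Rank products by how many query words appear in their title and tags.

    A simplified stand-in for keyword matching, not any vendor's real algorithm.
    """
    query_words = tokenize(query)
    scored = []
    for product in products:
        product_words = tokenize(product["title"] + " " + " ".join(product["tags"]))
        overlap = len(query_words & product_words)
        if overlap > 0:  # skip products that share no words with the query
            scored.append((overlap, product["title"]))
    # Highest overlap first; the sort is stable, so ties keep catalog order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [title for _, title in scored[:top_k]]


# Hypothetical catalog used only for illustration.
catalog = [
    {"title": "Floral Wrap Dress", "tags": ["dress", "summer"]},
    {"title": "Slim Fit Oxford Shirt", "tags": ["shirt", "formal", "men"]},
    {"title": "Linen Midi Dress", "tags": ["dress", "casual"]},
]

print(keyword_search("dress shirts", catalog))
```

With this sample catalog, the query “dress shirts” returns the two dresses, while the Oxford shirt is missed entirely because “shirts” never exactly matches “shirt”.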

This unimodal approach lets traditional search engines excel at speed and specialization. However, because they process only one type of data, they lack context and supporting information, so they may return less accurate results.

For instance, a query for “dress shirts” might pull up dresses instead of formal men’s shirts. The search engine matches the keywords, but it doesn’t understand the shopper’s intent.

Is this a problem your online fashion store is experiencing? Find out how to solve it in AI Site Search Strategies for Fashion eCommerce.

First, let’s think of how we, as humans, communicate. We don’t rely on words alone. We also use facial expressions, gestures, and tone of voice. Multimodal AI works the same way. It processes multiple forms of data at once. For this reason, multimodal AI helps humans interact with technology more naturally and effectively.

Next-gen eCommerce search engines are powered by multimodal AI. Take YesPlz AI fashion search, for example. This multimodal search engine processes both text and images at the same time. So, it understands more complex shopping queries and returns richer, more relevant results.

Up next, let’s dive into how multimodal AI for fashion works.

Each fashion item in a product catalog includes two types of data:

Text Data: Product titles, descriptions, and tags.

Visual Data: Product images.

Unimodal search engines overlook the second type because they only deal with text. Multimodal fashion search engines, by contrast, process both text and visual data, each through its own encoder.
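One way to picture this is a product record that keeps both kinds of data and sends each to its own encoder. The sketch below is hypothetical: the `Product` fields, `encode_product`, and the stub encoders are illustrative names, not part of YesPlz AI's system. In practice, each encoder would be a trained model that returns an embedding vector.

```python
from dataclasses import dataclass, field
from typing import Callable

Vector = list[float]


@dataclass
class Product:
    """A catalog item holding both text data and visual data (hypothetical schema)."""
    title: str
    description: str
    tags: list[str] = field(default_factory=list)
    image_path: str = ""  # visual data: location of the product photo

    def text_data(self) -> str:
        """Join the text fields that a text encoder would read."""
        return " ".join([self.title, self.description, *self.tags])


def encode_product(
    product: Product,
    text_encoder: Callable[[str], Vector],
    image_encoder: Callable[[str], Vector],
) -> dict[str, Vector]:
    """Send each type of data to its own encoder and keep both results."""
    return {
        "text": text_encoder(product.text_data()),
        "image": image_encoder(product.image_path),
    }


# Stub encoders stand in for real models so the example runs on its own.
item = Product("Cropped Cardigan", "Soft knit cardigan", ["knitwear"], "cardigan.jpg")
vectors = encode_product(
    item,
    text_encoder=lambda text: [float(len(text.split()))],  # placeholder: word count
    image_encoder=lambda path: [1.0, 0.0],  # placeholder: fixed vector
)
print(vectors)
```

Keeping the two encoders as separate, swappable callables mirrors the idea that text and images each get their own processing path before their results are combined.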

The process works in three steps:

Understanding fashion shoppers’ search queries.

Processing product data.

Delivering results based on text matching (keyword similarity) and visual matching.
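The three steps can be tied together with a simple score fusion. This sketch assumes the query and the product images are encoded into a shared vector space (as text-image embedding models do), so a text query can be compared directly with an image vector. The weight `alpha`, the `rank` function, and the toy three-dimensional vectors are illustrative assumptions, not YesPlz AI's actual ranking.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank(query_vec: list[float],
         products: dict[str, dict[str, list[float]]],
         alpha: float = 0.5) -> list[str]:
    """Blend text matching and visual matching into one score per product.

    query_vec: the encoded search query (step 1).
    products: precomputed text and image vectors per product (step 2).
    alpha: hypothetical weight on the text score; 1 - alpha goes to the visual score.
    """
    scored = []
    for name, vecs in products.items():
        text_score = cosine(query_vec, vecs["text"])
        visual_score = cosine(query_vec, vecs["image"])
        scored.append((alpha * text_score + (1 - alpha) * visual_score, name))
    scored.sort(reverse=True)  # step 3: highest blended score first
    return [name for _, name in scored]


# Toy dimensions (assumed): [black, white, top].
catalog = {
    "tagged black top": {"text": [1.0, 0.0, 1.0], "image": [1.0, 0.0, 1.0]},
    "untagged black top": {"text": [0.0, 0.0, 1.0], "image": [1.0, 0.0, 1.0]},
    "white top": {"text": [0.0, 1.0, 1.0], "image": [0.0, 1.0, 1.0]},
}
print(rank([1.0, 0.0, 1.0], catalog))  # query: "black top"
```

The untagged black top still ranks above the white top because its image vector matches the query even though its text only says “top”.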

Understanding Search Queries

Shoppers can type whatever is on their mind into the search bar. Their search terms instruct the search engine to find and match relevant fashion items in the product catalog.

Text data is processed through a text encoder. It matches shopper queries with relevant products based on similar words or phrases.
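Production text encoders are trained models, but the idea of matching on similar words, not only identical ones, can be illustrated with a much simpler stand-in: character trigram overlap (Jaccard similarity). This is a swapped-in technique chosen for illustration, not the encoder YesPlz AI uses, and the function names are hypothetical.

```python
def char_ngrams(text: str, n: int = 3) -> set[str]:
    """Collect character n-grams from each word, padded with '#' at both ends."""
    grams: set[str] = set()
    for word in text.lower().split():
        padded = f"#{word}#"
        grams.update(padded[i:i + n] for i in range(len(padded) - n + 1))
    return grams


def text_similarity(query: str, product_text: str) -> float:
    """Jaccard similarity of character trigrams: shared grams / all distinct grams."""
    q, p = char_ngrams(query), char_ngrams(product_text)
    union = q | p
    return len(q & p) / len(union) if union else 0.0


print(text_similarity("shirts", "shirt"))  # 4 of 7 trigrams shared
print(text_similarity("shirts", "dress"))  # no shared trigrams
```

Unlike exact keyword matching, this scores “shirts” as close to “shirt” while still treating it as unrelated to “dress”.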

Visual data is processed by an image encoder using computer vision. It scans every product image in the catalog to identify what each item shows. The information extracted from the images supplements whatever is missing from the text data.

Take a look at the image below. Fashion retailers, or someone on their team, might forget to add important product attributes such as black color or ¾ sleeves. Because multimodal AI for fashion can read product images, it extracts this information automatically. This fills in the missing data and helps shoppers get accurate results.
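The gap-filling step can be sketched as a simple merge: attributes the retailer entered are kept, and attributes detected in the image fill only fields that are missing or empty. The attribute names, `enrich_attributes`, and the `image_attrs` dictionary are hypothetical stand-ins for what a computer vision model might return.

```python
def enrich_attributes(text_attrs: dict[str, str],
                      image_attrs: dict[str, str]) -> dict[str, str]:
    """Fill attributes missing from the text data with values read from the image.

    Values the retailer entered take priority; image-derived values only fill gaps.
    """
    merged = dict(text_attrs)
    for key, value in image_attrs.items():
        if not merged.get(key):  # key absent, or present but empty
            merged[key] = value
    return merged


# The retailer left color blank and never entered a sleeve length.
text_attrs = {"category": "cardigan", "color": ""}
# Hypothetical output of an image encoder for the same product photo.
image_attrs = {"category": "sweater", "color": "black", "sleeve_length": "3/4"}

print(enrich_attributes(text_attrs, image_attrs))
```

Letting retailer-entered values win is a conservative design choice: the image model only adds information and never overwrites what the team already entered.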

Conclusion

Retailers want better search recommendations and smarter product discovery for their shoppers. Multimodal AI for fashion can help them reach that goal, but most retailers don't have the data, technology, or resources to build it themselves.

For those looking to implement multimodal AI without extensive resources, YesPlz AI offers a solution that:

Integrates seamlessly into eCommerce platforms.

Adapts to unique use cases and businesses of different sizes.

Enhances search accuracy with a deep understanding of both text and images.

With the underlying technology of multimodal AI, fashion search engines evolve from simple keyword matches to intelligent, style-aware assistants that understand what shoppers really want.

Ready to transform your fashion search experience? Book a demo today and see the power of multimodal AI for fashion.

Written by YesPlz.AI

We build next-gen visual search and recommendation for online fashion retailers.

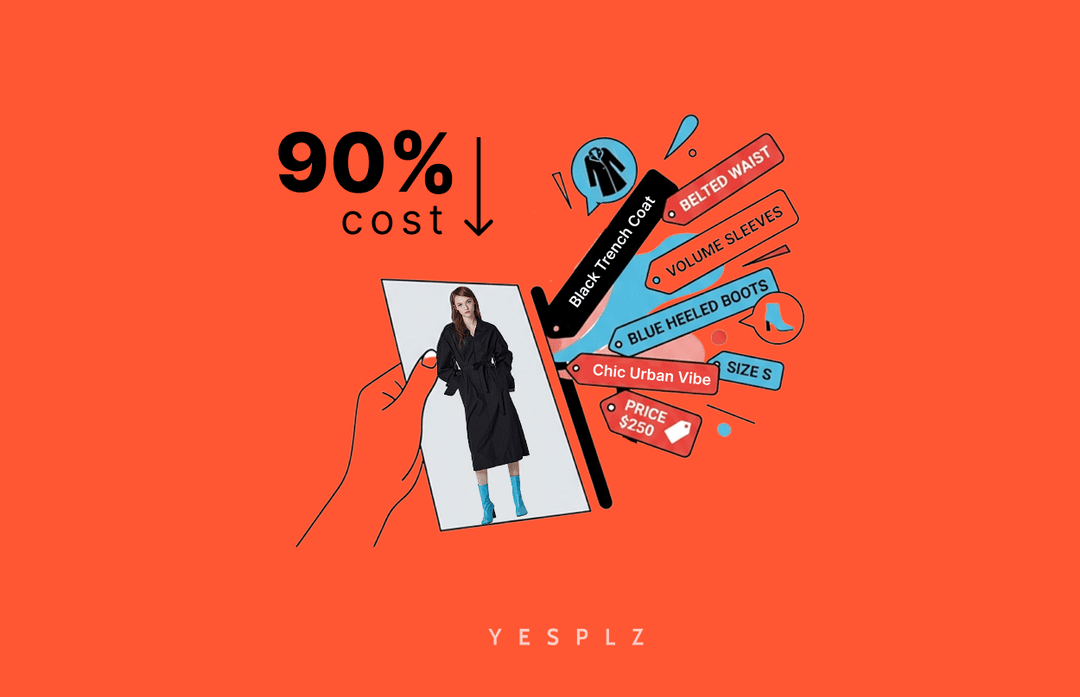

Inconsistent tags hurting sales? Self-service product tagging delivers accuracy and speed without enterprise prices. Tag products 10x faster at 90% lower cost.

by YesPlz.AI

We analyzed 13,374 fashion searches. AI tagging increased product discovery by 22% and boosted clicks by 11%. Here’s what the data revealed.

by YesPlz.AI